Launching an Unmoderated Study in User Interviews

Now that you understand the basics, features, and integrations for unmoderated research in User Interviews, you’re ready to launch an unmoderated study.

In this lesson of Unmoderated Research with User Interviews, you’ll learn best practices for launching an unmoderated study, including:

- Tips for choosing the right sample size

- How to create effective screener surveys

- Best practices for writing invite emails and task instructions

📹 Prefer watching to reading? This content is available as both an article and a video. Watch our Strategic Customer Success Manager, Christy Banasihan, take you through the content in the video below or keep reading to dive in!

How to create an unmoderated project in User Interviews

When you create a new study with User Interviews, you’ll be prompted to tell us what kind of study you’re running. Select “unmoderated task” at this step to pre-populate your project builder with the relevant templates, making it easy for you to set up your unmoderated study and communicate the right instructions to your participants.

Then, follow the prompts throughout the rest of the builder to set up your project. The steps are similar to those you may have encountered when setting up a moderated project, such as a 1:1 interview. However, we recommend a few best practices specifically for unmoderated studies, so we’ll provide more detailed tips in the next sections.

💰 Need help choosing an incentive for unmoderated studies? Try our UX Research Incentive Calculator for a personalized, data-backed recommendation.

Considerations when choosing the right sample size

The right sample size for an unmoderated test depends on a number of factors, including:

- The type of test: Quantitative studies typically require more participants than qualitative. Nielsen Norman Group recommends about 5-10 participants for qualitative research and 40+ participants for quantitative research—but there’s no hard-and-fast industry consensus around these numbers, which is why it’s important to carefully consider your sample size on a case-by-case basis.

- The potential impact of the test: For studies with higher stakes, you’ll probably want to recruit more participants to ensure accurate results.

- The number of testing rounds: If you plan on doing multiple rounds of tests, you can get away with recruiting fewer participants for each test.

- The complexity of the task: More complicated tasks have a higher likelihood of skewed results, so you can get more accurate data by recruiting more participants.

- The product development stage: The earlier you are in the development cycle, the more severe and obvious errors tend to be, so you can usually get away with recruiting fewer participants for early-stage studies.

Ultimately, the right sample size varies from study to study. For qualitative research, check out our Qualitative Sample Size Calculator to help you determine the right number of participants to recruit. When in doubt, consult your Customer Success Manager (CSM) for advice on choosing the right sample size.

Tips for writing screener surveys for unmoderated tests

Creating a screener survey for your study is always optional, but researchers often find it a helpful way to ensure participants meet the qualifying criteria for their study.

1. Don’t give away too much information.

Don’t reveal too much about your study or goals in the screener. Instead, use questions to filter for the right participants without giving away your intentions.

- Avoid giving away your participant selection criteria.

- Use indirect questions to identify qualified respondents.

2. Avoid too many “yes/no” questions.

Yes/no questions make it easy for applicants to guess the qualifying answer. Broader questions with several answer options reduce bias and improve data quality.

- Poor example: "Do you use an iPhone?"

- Better example: "What smartphone do you own?"

    - iPhone (Accept)

    - Android (Reject)

    - I don’t own a smartphone (Reject)

3. Add a personality question.

Include a short-answer question to learn more about your candidates’ personality or communication style.

Examples:

- “What’s your favorite app, and why do you love it?”

- “If you could have one superpower, what would it be?”

4. Use skip logic.

Streamline your survey by showing follow-up questions only to relevant respondents.

For example, for the question "What smartphone do you use?" you might use the following responses and skip logic:

- iPhone (Continue to next page)

- Android (End survey)
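As a rough illustration, the accept/reject rules and skip logic from the examples above can be sketched in Python. This is only a model for reasoning about your screener design, not how the User Interviews project builder is implemented: the `Option` class, the `CONTINUE` and `END_SURVEY` labels, and the `evaluate_answer` function are all hypothetical names, and ending the survey for the "I don't own a smartphone" answer is an assumption.

```python
from dataclasses import dataclass

# Hypothetical action labels for skip logic (not User Interviews identifiers).
CONTINUE = "continue to next page"
END_SURVEY = "end survey"


@dataclass(frozen=True)
class Option:
    label: str       # answer text shown to the applicant
    qualifies: bool  # True = Accept, False = Reject
    action: str      # skip logic applied when this answer is chosen


# A multiple-choice question instead of a yes/no "Do you use an iPhone?"
SMARTPHONE_QUESTION = {
    "prompt": "What smartphone do you own?",
    "options": [
        Option("iPhone", True, CONTINUE),
        Option("Android", False, END_SURVEY),
        # Assumption: this answer also ends the survey.
        Option("I don't own a smartphone", False, END_SURVEY),
    ],
}


def evaluate_answer(question: dict, answer: str) -> Option:
    """Return the option matching the applicant's answer."""
    for option in question["options"]:
        if option.label == answer:
            return option
    raise ValueError(f"Unknown answer: {answer!r}")


if __name__ == "__main__":
    chosen = evaluate_answer(SMARTPHONE_QUESTION, "Android")
    print(chosen.qualifies, chosen.action)
```

Writing the rules out this way makes it easy to check that every answer option has both a qualification outcome and a next step before you build the screener.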

These tips help your screener collect honest, high-quality responses while keeping the process efficient.

Tips for writing invite emails (Hub only)

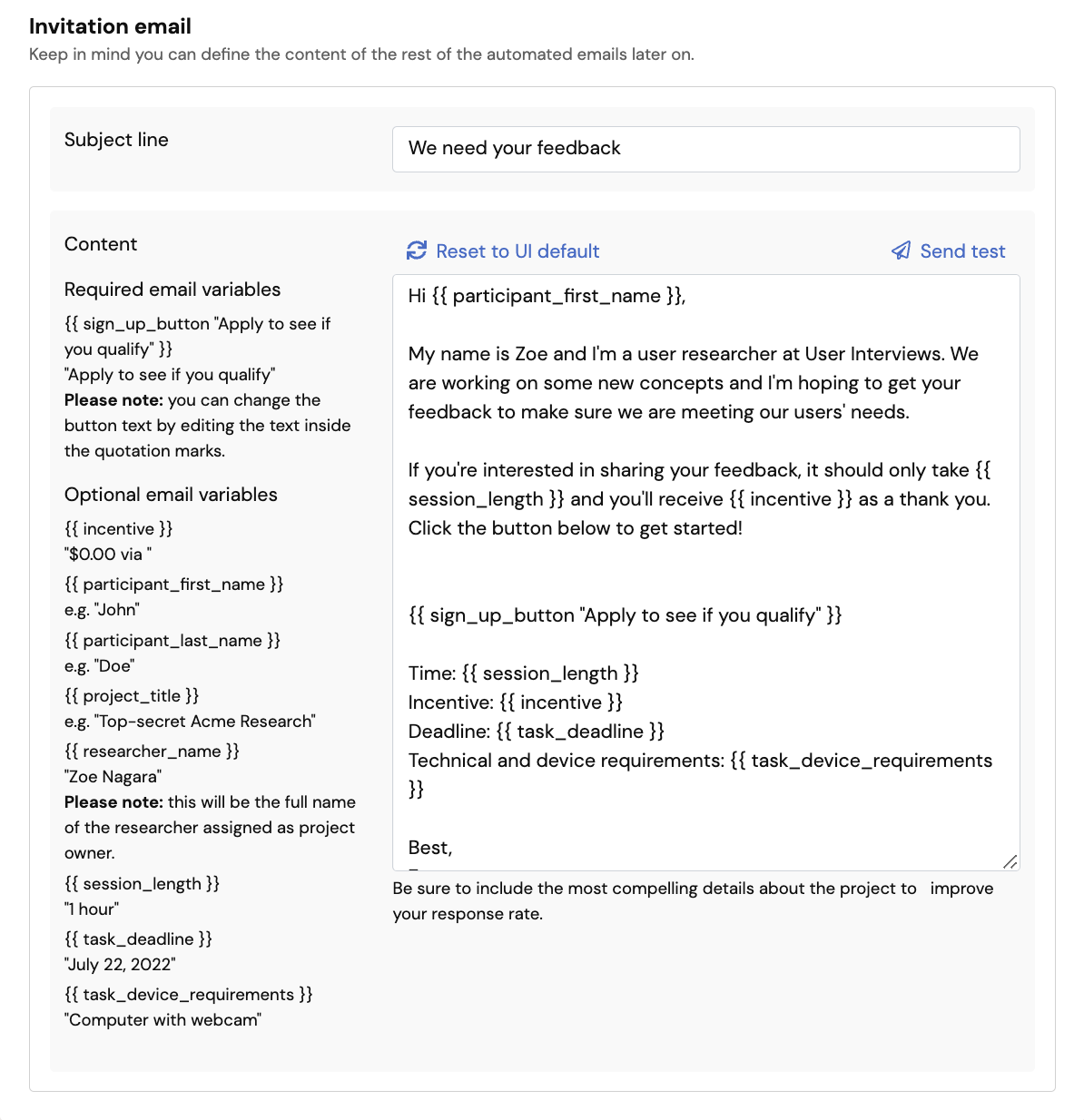

For Hub studies, you can write an invite email to send directly to participants in your Hub database.

We provide a default email invitation for unmoderated studies that you can use as a starting point. This dynamic template automatically pulls in key information you entered in the project builder, including the incentive amount, session length, task deadline, device requirements, and other details participants will need to know.

You can customize any of the copy within the email so it highlights the most compelling details about your study for those who are considering applying. We strongly recommend customizing the copy on the sign-up button so it matches what the participant will experience, which depends on how you’ve configured your study.

You can customize the button copy by editing the text inside the quotation marks. By default, it reads “Apply to see if you qualify,” but you can simply overwrite this text.

Here are some examples of calls-to-action you may use in various study configurations:

- If your study includes a screener survey, try “Apply to see if you qualify” or “Take the screener survey.” This language sets the expectation that there is an application process.

- If your study doesn’t include a screener and you’ve selected automatic approval, try “Go to task.” This tells participants that they can take the task right away: clicking the button launches their session.

- If your study doesn’t include a screener and you’ve selected manual approval, try “Sign up to participate.” This indicates that there is a sign-up form they’ll need to fill out as the first step.
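If you manage several studies and want to keep button copy consistent, the mapping above can be written down as a small lookup. The Python sketch below is purely illustrative: `suggest_button_copy` and its parameters are hypothetical names, not part of any User Interviews API, and it simply encodes the three configurations listed above.

```python
from typing import List


def suggest_button_copy(has_screener: bool, approval: str) -> List[str]:
    """Suggest call-to-action text for an invite email.

    `approval` is assumed to be either "automatic" or "manual".
    """
    if has_screener:
        # Applicants go through an application step first.
        return ["Apply to see if you qualify", "Take the screener survey"]
    if approval == "automatic":
        # Clicking the button launches the session right away.
        return ["Go to task"]
    if approval == "manual":
        # Participants fill out a sign-up form as the first step.
        return ["Sign up to participate"]
    raise ValueError(f"Unknown approval type: {approval!r}")


if __name__ == "__main__":
    print(suggest_button_copy(has_screener=False, approval="automatic"))
```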

You can send a test email to yourself at any time to preview your changes, and you can also reset to the default if you want to start over.

Tips for writing task instructions

On the “Task details” page, there is a field for you to provide task instructions to your participants. This is an open text field you can use to help prepare participants and set their expectations for the task they’re about to complete. The instructions you provide here will dynamically display on task summary screens and emails sent to your participants.

Here are some examples of task instructions you may wish to include:

- Login or installation instructions: Does your task take place in another application that will require the participant to create an account or download and install software? If so, does that application provide participant-facing documentation that you could link to as a reference?

- Technical requirements: Does your task require the participant to use their webcam, speakers, microphone, screen sharing, or a specific browser or device? While this information is included elsewhere on confirmation screens, it doesn’t hurt to remind participants to check their tech setup before attempting the task.

- Task instructions: Set expectations for what the participant will be expected to do once they open up your task. A simple and brief bulleted list is best here — you don’t need to provide all the details if the task itself contains directions.

- Tips for participating: Include anything else you’d like your participants to keep in mind as they complete your task. Examples may include finding a quiet space, minimizing distractions, or providing clear and thoughtful answers.

💡 While key study details such as task length and device requirements are already included in participant communication, this is your opportunity to add clarification and color to really set up your participants for success. Remember, they won’t have the benefit of a moderator guiding them through the session, so if there’s anything you’d like them to know, now’s your chance!

Keep learning

Tracking Participant Progress for Unmoderated Studies